In 1994, four years prior to his death, Jacob Cohen, psychological statistician and ping pong aficionado, published an influential pamphlet, “The earth is round (p < .05)”, that psychologists cite as a mantra when they contemplate their discipline (which according to Google Scholar has not happened more than 3,576 times thus far). In one of the most underappreciated acts of speciesism in psychology, Cohen suggested that Martians cannot be members of Congress. Also, importantly, he urged psychologists to “finally rely, as has been done in all the older sciences, on replication” (p. 997). Naturally, in keeping with the discipline’s traditions, Cohen had never done any replications himself, and thus became psychology’s first armchair replicationist.

It took about twenty years of preparation (Makel, Plucker, & Hegarty, 2012) for a handful of scientists to heed Cohen’s advice (e.g., Donnellan, Lucas, & Cesario, 2015; Gomes & McCullough, 2015; Ong, IJzerman, & Leung, 2015; Ritchie, Wiseman, & French, 2012). For the first time in the discipline’s history, psychologists started to “rely on replications” – only to be let down. Individual efforts to replicate studies and results of collaborations by multiple laboratories, such as Many Labs (Ebersole et al., in press; Klein et al., 2014), all point to reliable replication of the inability to successfully replicate studies. Perhaps the only other phenomenon that has successfully replicated is writer’s block (Didden, Sigafoos, O’Reilly, Lancioni, & Sturmey, 2007), though luckily this has not affected the critics of open science practices.

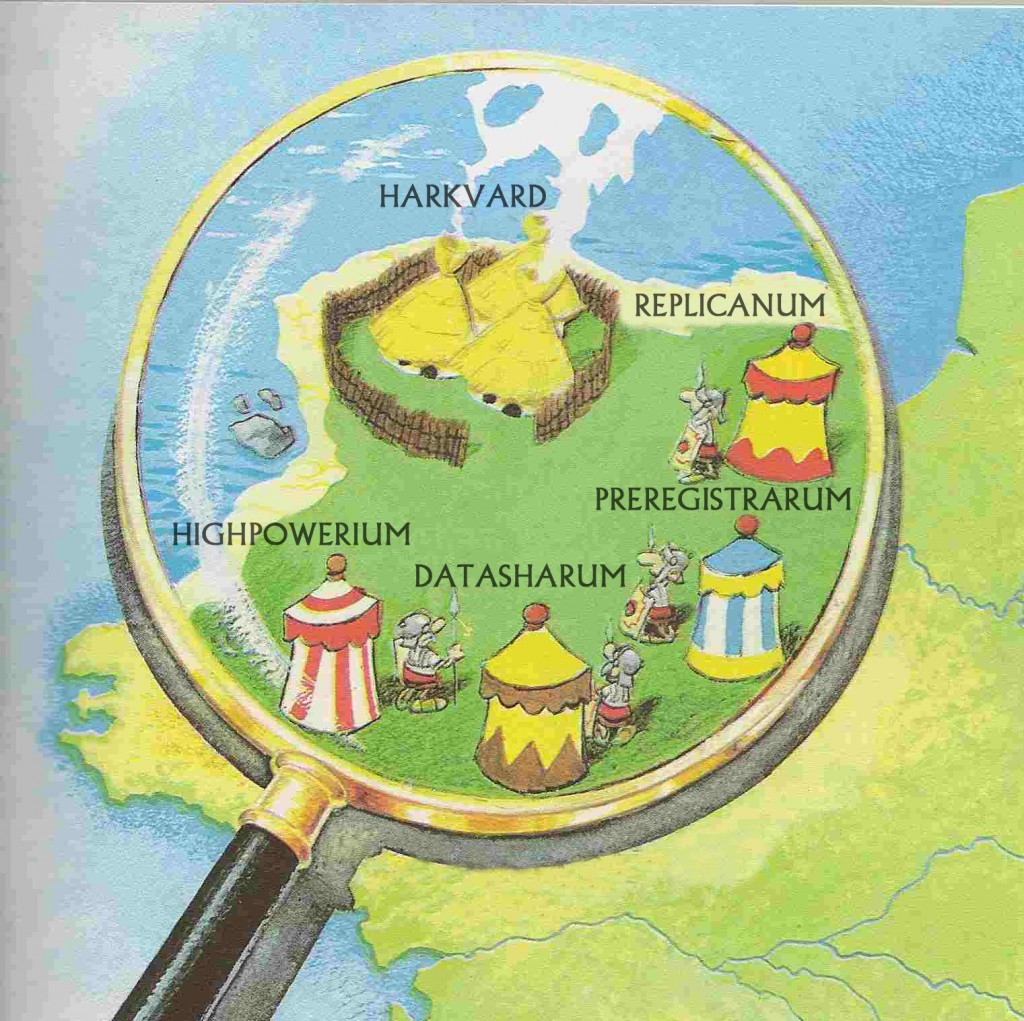

The year is 2015 A.D. Psychology is entirely occupied by replicationists. Well, not entirely… One small but influential group of indomitable psychologists still holds out against the invaders. And life is not easy for the psychology legionaries who garrison the fortified camps of Highpowerium, Datasharum, Preregistrarum, and Replicanum…

In an attempt to estimate the spread of this troubling defect, a collective of 270 researchers from 125 institutions called the Open Science Collaboration (2012, 2015) repeated the procedures of a quasi-random sample of 100 experimental and correlational studies published in the Journal of Experimental Psychology: Learning, Memory, and Cognition, the Journal of Personality and Social Psychology, and Psychological Science in 2008. Their mortifying conclusions: Although 97% of the original studies reported that they discovered a true effect, only 36% of the OSC replications found a significant result. Some nifty researchers were quick to call this the final proof for psychology’s “replication crisis” which, in essence, describes a crisis of psychologists’ ability to find statistically significant effects when they are not the first to look for them.
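A rough aside on those numbers: even when an effect is real, a replication only reaches p < .05 with a probability equal to its statistical power, and power depends heavily on sample size. The sketch below is not taken from the OSC analysis. It uses a normal approximation to a two-sided, two-sample test, and the effect size of 0.3 and the sample sizes are hypothetical values chosen purely for illustration.

```python
from math import sqrt
from statistics import NormalDist


def approx_power(d, n_per_group, alpha=0.05):
    """Approximate power of a two-sided, two-sample z-test.

    d is the assumed true standardized mean difference and n_per_group
    is the number of participants in each of the two groups. This is a
    normal approximation, not an exact t-test power calculation.
    """
    std_normal = NormalDist()
    z_crit = std_normal.inv_cdf(1 - alpha / 2)
    noncentrality = d * sqrt(n_per_group / 2)
    # Probability of landing in the upper or the lower rejection region.
    upper = 1 - std_normal.cdf(z_crit - noncentrality)
    lower = std_normal.cdf(-z_crit - noncentrality)
    return upper + lower


# Hypothetical true effect (d = 0.3) tested at several sample sizes.
for n in (20, 50, 100, 200):
    print(f"n per group = {n:3d}: approx. power = {approx_power(0.3, n):.2f}")
```

Under these assumptions, a small study can miss a perfectly real effect most of the time, so a single nonsignificant replication is weak evidence either way.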

In a commentary on the OSC study, Harvard psychologist Daniel Gilbert, together with his colleagues, political scientists Gary King and Stephen Pettigrew, and University of Virginia psychologist Timothy Wilson (2016a, 2016b, 2016c), offers a different, and arguably more plausible, account. Although many scientist-bloggers (e.g., Bishop, 2016; Funder, 2016; Gelman, 2016; Lakens, 2016a, 2016b; Nosek & Gilbert, 2016; Simonsohn, 2016; Srivastava, 2016; Vazire, 2016; Zwaan, 2016) in concert with members of the OSC (Anderson et al., 2016) were quick to dismiss Gilbert et al.’s criticism, their devastating critique demands we address the one question that makes the replicationists uncomfortable: If a procedure differs even slightly from the original it tries to mimic (in the population participants are sampled from, the stimulus materials, or the prevailing neutrino winds), is the expectation of a successful replication actually warranted?

Much like the Open Science Collaboration, Rick Deckard hunts high-powered replicants.

Image credit: Bill Lile (CC BY-NC-ND)

This is corroborated by a Bayesian reanalysis of the OSC study (Etz & Vandekerckhove, 2016) suggesting its purported failure to replicate many target effects can be adequately explained by dissimilarities in sample sizes of originals and replications (unlike Martians, it is still up for debate whether Bayesians can become members of Congress). Thus, it can be concluded that the actual crisis does not lie in the replication of statistically significant effects themselves, but in the reproduction fidelity of the circumstances under which they were observed the first time.

And that crisis ends now. Dr. Primestein has been working relentlessly since the release of Gilbert et al.’s analysis and is finally ready to present the remedy to solve psychology’s actual replication crisis once and for all: The Replication Mirror – an invaluable tool that will replicate any study all by itself! Using the Replication Mirror could not be simpler: Just put it up on a wall in your lab space, run your subjects in front of the mirror, and your study will replicate automagically.

“Mirror, mirror on the wall, who has the greatest R-INDEX of them all?” With Dr. Primestein’s Replication Mirror, the answer will be YOU!

Image credit: Christine Vaufrey (CC BY; derivative)

Are you one of those poor researchers whose procedures fail to be reproduced by others? Don’t worry: Get your very own Replication Mirror and your personal replication crisis will resolve instantly! Concerned that your subject pool might be exhausted and you won’t get a similar sample size in your replication? One Replication Mirror will multiply almost anything by a factor of two: sample sizes, statistical power, the number of studies — and eventually, the number of publications on your CV.

Many labs are already equipped with one-way mirrors, which makes upgrading them with Dr. Primestein’s Replication Mirror cheap and convenient. But free replications are not all the Replication Mirror can give you! Did you run a study, but the p-value is only at .40 although you know the effect to be true? There’s no need for the annoying effort of p-hacking, outlier removal, and hide-and-seek moderator analyses. Simply hold your SPSS output in front of the Replication Mirror and watch the p-value magically transform into .04! (R support coming soon.)

If one replication is not enough, just mount two Replication Mirrors on opposite walls. Facing one of them and saying “Kahneman!” five times will summon a friendly old man who runs an infinite number, a “daisy chain,” of replications (Kahneman, 2012), so you don’t have to wait for some incompetent second stringers to botch replication attempts of your work. Don’t be despicable and go replicable. Order your very own Replication Mirror today!

“Kahneman! Kahneman! Kahneman! Kahneman! Kahneman!”

Image credit: Eirik Solheim (CC BY-SA; derivative)

* Comes with a free copy of the movie “Replicable Me”!

References

Anderson, C. J., Bahnik, S., Barnett-Cowan, M., Bosco, F. A., Chandler, J., Chartier, C. R., … Zuni, K. (2016). Response to comment on “Estimating the reproducibility of psychological science.” Science, 351(6277), 1037. doi: 10.1126/science.aad9163 [.pdf]

Bishop, D. (2016). There is a reproducibility crisis in psychology and we need to act on it. Retrieved from http://deevybee.blogspot.com [Link]

Cohen, J. (1994). The earth is round (p < .05). American Psychologist, 49(12), 997–1003. doi: 10.1037/0003-066X.49.12.997 [.pdf]

Didden, R., Sigafoos, J., O’Reilly, M. F., Lancioni, G. E., & Sturmey, P. (2007). A multisite cross-cultural replication of Upper’s (1974) unsuccessful self-treatment of writer’s block. Journal of Applied Behavior Analysis, 40(4), 773. doi: 10.1901/jaba.2007.773 [.pdf]

Donnellan, M. B., Lucas, R. E., & Cesario, J. (2015). On the association between loneliness and bathing habits: Nine replications of Bargh and Shalev (2012) Study 1. Emotion, 15(1), 109–119. doi: 10.1037/a0036079 [.pdf]

Ebersole, C. R., Atherton, O. E., Belanger, A. L., Skulborstad, H. M., Allen, J. M., Banks, J. B., … Nosek, B. A. (in press). Many Labs 3: Evaluating participant pool quality across the academic semester via replication. Journal of Experimental Social Psychology. [.pdf]

Etz, A., & Vandekerckhove, J. (2016). A Bayesian perspective on the Reproducibility Project: Psychology. PLOS ONE, 11(2), e0149794. doi: 10.1371/journal.pone.0149794 [.pdf]

Funder, D. C. (2016). What if Gilbert is right? Retrieved from https://funderstorms.wordpress.com [Link]

Gelman, A. (2016). Replication crisis crisis: Why I continue in my “pessimistic conclusions about reproducibility.” Retrieved from http://www.andrewgelman.com [Link]

Gilbert, D. T., King, G., Pettigrew, S., & Wilson, T. D. (2016a). Comment on “Estimating the reproducibility of psychological science.” Science, 351(6277), 1037. doi: 10.1126/science.aad7243 [.pdf]

Gilbert, D. T., King, G., Pettigrew, S., & Wilson, T. D. (2016b). A response to the reply to our technical comment on “Estimating the reproducibility of psychological science.” Retrieved from http://projects.iq.harvard.edu/psychology-replications/ [.pdf]

Gilbert, D. T., King, G., Pettigrew, S., & Wilson, T. D. (2016c). More on “Estimating the reproducibility of psychological science.” Retrieved from http://projects.iq.harvard.edu [.pdf]

Gomes, C. M., & McCullough, M. E. (2015). The effects of implicit religious primes on dictator game allocations: A preregistered replication experiment. Journal of Experimental Psychology: General, 144(6), e94–e104. doi: 10.1037/xge0000027 [.pdf]

Kahneman, D. (2012). A proposal to deal with questions about priming effects. Retrieved from http://www.nature.com [.pdf]

Klein, R. A., Ratliff, K. A., Vianello, M., Adams, R. B., Bahnik, S., Bernstein, M. J., … Nosek, B. A. (2014). Investigating variation in replicability: A “many labs” replication project. Social Psychology, 45(3), 142–152. doi: 10.1027/1864-9335/a000178 [.pdf]

Lakens, D. (2016a). The difference between a confidence interval and a capture percentage. Retrieved from http://daniellakens.blogspot.com [Link]

Lakens, D. (2016b). The statistical conclusions in Gilbert et al (2016) are completely invalid. Retrieved from http://daniellakens.blogspot.com [Link]

Makel, M. C., Plucker, J. A., & Hegarty, B. (2012). Replications in psychology research: How often do they really occur? Perspectives on Psychological Science, 7(6), 537–542. doi: 10.1177/1745691612460688

Nosek, B. A., & Gilbert, E. (2016). Let’s not mischaracterize replication studies: authors. Retrieved from http://www.retractionwatch.com [Link]

Ong, L. S., IJzerman, H., & Leung, A. K. Y. (2015). Is comfort food really good for the soul? A replication of Troisi and Gabriel’s (2011) Study 2. Frontiers in Psychology, 6, 314. doi: 10.3389/fpsyg.2015.00314 [Link]

Open Science Collaboration. (2012). An open, large-scale, collaborative effort to estimate the reproducibility of psychological science. Perspectives on Psychological Science, 7(6), 657–660. doi: 10.1177/1745691612462588 [.pdf]

Open Science Collaboration. (2015). Estimating the reproducibility of psychological science. Science, 349(6251), aac4716. doi: 10.1126/science.aac4716 [.pdf]

Ritchie, S. J., Wiseman, R., & French, C. C. (2012). Failing the future: Three unsuccessful attempts to replicate Bem’s “retroactive facilitation of recall” effect. PLoS ONE, 7(3), e33423. doi: 10.1371/journal.pone.0033423 [.pdf]

Simonsohn, U. (2016). Evaluating replications: 40% full ≠ 60% empty. Retrieved from http://www.datacolada.org [Link]

Srivastava, S. (2016). Evaluating a new critique of the Reproducibility Project. Retrieved from https://hardsci.wordpress.com [Link]

Vazire, S. (2016). Is this what it sounds like when the doves cry? Retrieved from http://sometimesimwrong.typepad.com [Link]

Zwaan, R. (2016). Why continue to elicit false confessions from the data? Retrieved from http://rolfzwaan.blogspot.com [Link]